Master Prompts Like a Pro in Minutes

How Prompt Engineering Works

Prompt engineering is the practice of designing and optimizing prompts for language models. It matters across NLP and text generation because the format of a prompt guides how the model responds, whether the task is writing product descriptions or powering conversational AI. Some prompt formats are known to work reliably, but exploring new formats is encouraged.

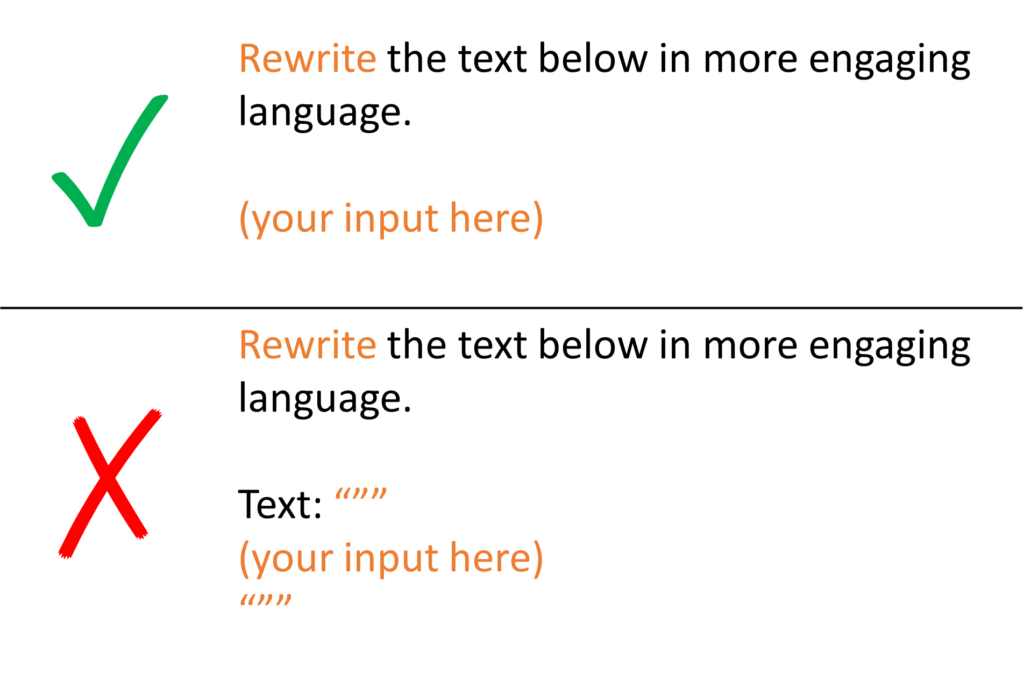

In the examples, “(your input here)” is a placeholder for your actual text or context.

Rules of Thumb and Examples

Rule #1

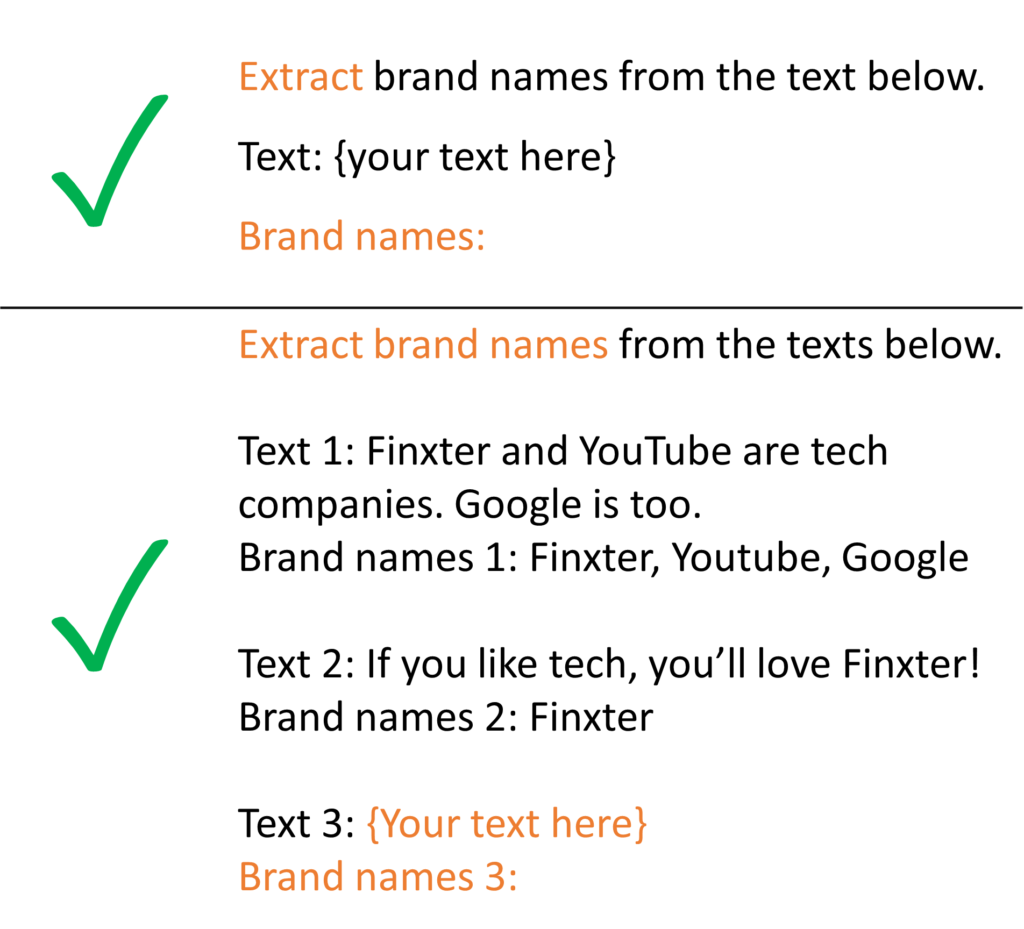

Put instructions at the beginning of the prompt, and use ### or """ to separate the instructions from the context.
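As a rough illustration of this rule, here is a minimal sketch that puts the instruction first and fences the context with a separator. The helper name `build_prompt` and the sample instruction are hypothetical, not part of any library.

```python
def build_prompt(instruction: str, context: str, separator: str = '"""') -> str:
    """Put the instruction first, then wrap the context in a separator."""
    return f"{instruction}\n\n{separator}\n{context}\n{separator}"


# Hypothetical instruction; "(your input here)" stands in for real text.
prompt = build_prompt(
    "Summarize the text below as a bullet list of the most important points.",
    "(your input here)",
)
print(prompt)
```

Passing `separator="###"` gives the other common delimiter without changing the layout.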

Rule #2

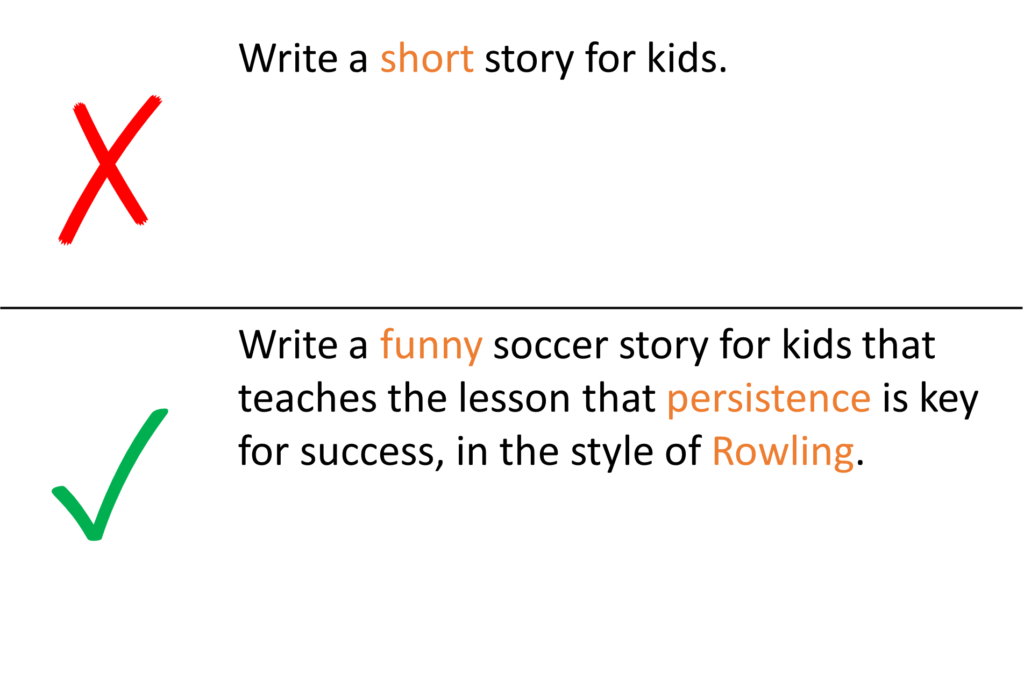

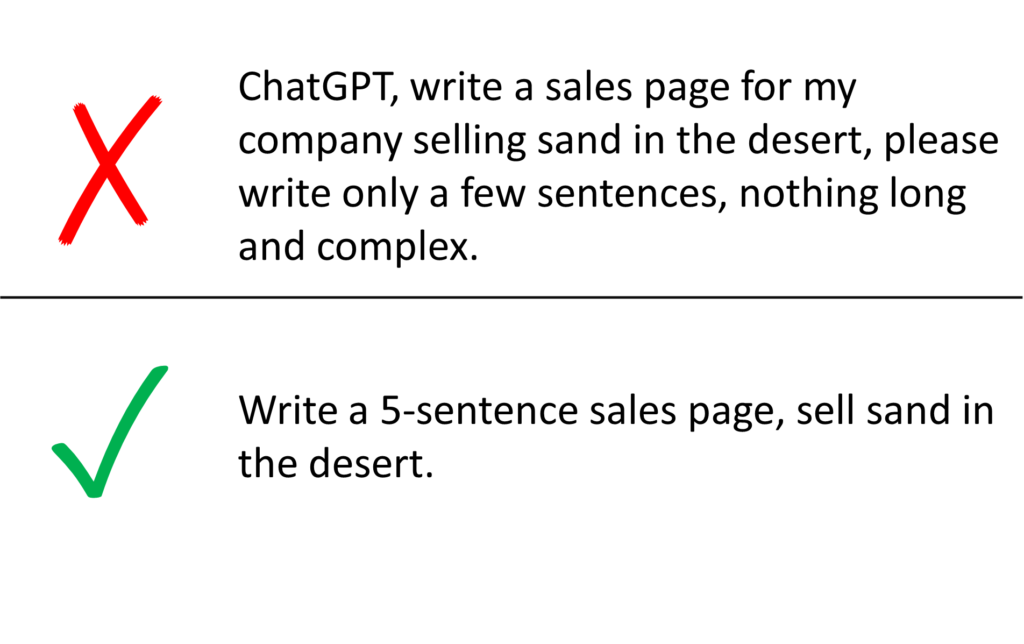

Be specific and detailed about the desired context, outcome, length, format and style.
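One way to make this habit concrete is to treat each detail as a required field. The sketch below is an assumption about how you might organize it; `PromptSpec` and its field names are hypothetical, and the product is made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container that forces every detail to be spelled out."""
    task: str
    context: str
    length: str
    output_format: str
    style: str

    def render(self) -> str:
        # One labeled line per detail keeps the prompt easy to scan.
        return (
            f"{self.task}\n"
            f"Context: {self.context}\n"
            f"Length: {self.length}\n"
            f"Format: {self.output_format}\n"
            f"Style: {self.style}"
        )


vague = "Write a product description."
specific = PromptSpec(
    task="Write a product description for a stainless-steel water bottle.",
    context="Sold online to hikers and commuters.",
    length="Two short paragraphs.",
    output_format="Plain text, no headings.",
    style="Friendly and practical.",
).render()
print(specific)
```

Compared with `vague`, the rendered prompt states the context, length, format, and style explicitly instead of leaving the model to guess.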

Rule #3

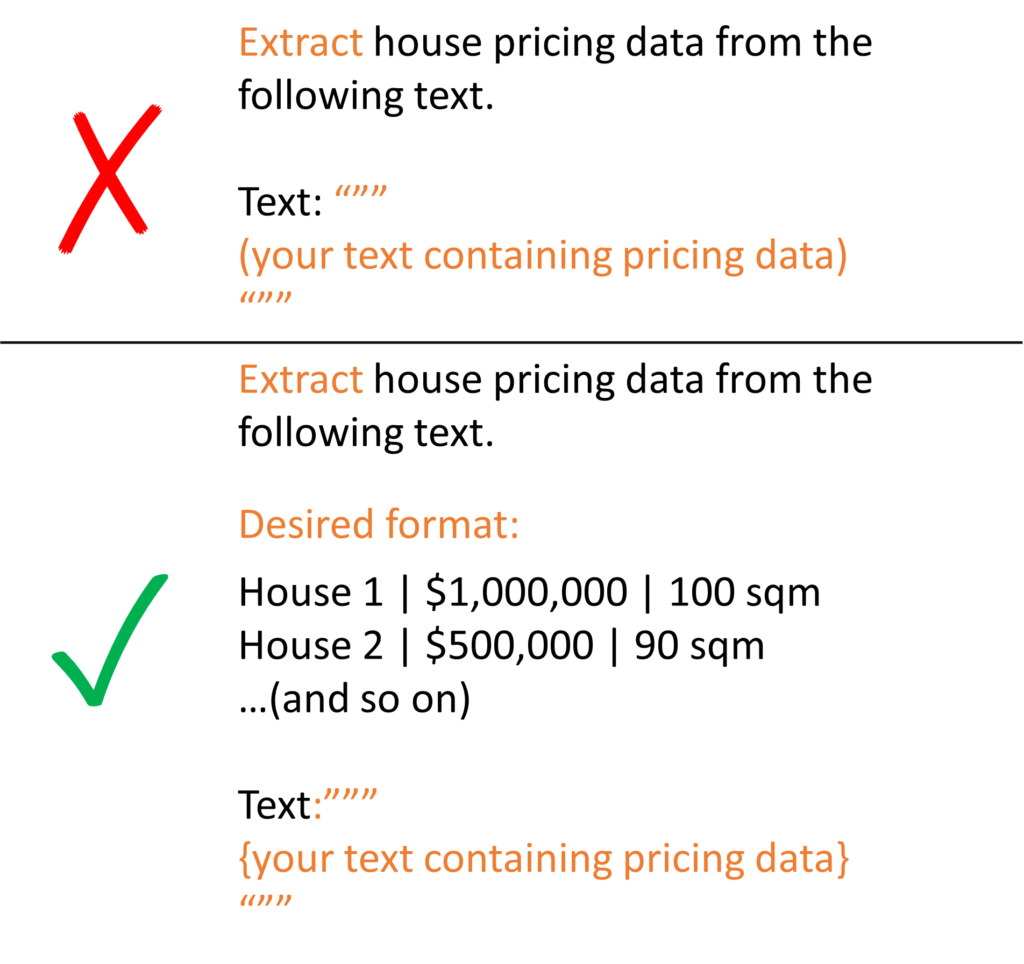

Give examples of desired output format.
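A practical benefit of showing the desired format is that the reply becomes easy to parse. The sketch below assumes a two-line "label: comma-separated list" format; the format string, function names, and the sample reply are all hypothetical (the reply is hand-written, not real model output).

```python
from __future__ import annotations

# Hypothetical output format shown to the model inside the prompt.
DESIRED_FORMAT = (
    "Company names: <comma_separated_list>\n"
    "People names: <comma_separated_list>"
)


def build_extraction_prompt(text: str) -> str:
    """Instruction first, then the desired format, then the delimited text."""
    return (
        "Extract the company names and people names mentioned in the text below.\n\n"
        f"Desired format:\n{DESIRED_FORMAT}\n\n"
        f'Text: """\n{text}\n"""'
    )


def parse_response(response: str) -> dict[str, list[str]]:
    """Parse a reply that follows the 'label: a, b, c' format."""
    result: dict[str, list[str]] = {}
    for line in response.splitlines():
        if ":" not in line:
            continue  # skip lines that do not follow the format
        label, _, values = line.partition(":")
        result[label.strip()] = [v.strip() for v in values.split(",") if v.strip()]
    return result


# Hand-written sample reply, used only to exercise the parser.
sample_reply = "Company names: Acme Corp, Globex\nPeople names: Ada Lovelace"
print(parse_response(sample_reply))
```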

Rule #4

First try without examples (zero-shot), then try giving a few examples (few-shot).
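The two attempts differ only in whether worked examples appear before the real input. Here is a minimal sketch, assuming a keyword-extraction task; the function names, labels, and example pairs are hypothetical.

```python
def zero_shot(instruction: str, text: str) -> str:
    """First attempt: the instruction and the input, with no examples."""
    return f"{instruction}\n\nText: {text}\nKeywords:"


def few_shot(instruction: str, examples: list, text: str) -> str:
    """Second attempt: the same prompt with worked examples before the input."""
    parts = [instruction, ""]
    for example_text, example_keywords in examples:
        parts.append(f"Text: {example_text}")
        parts.append(f"Keywords: {example_keywords}")
        parts.append("")  # blank line between examples
    parts.append(f"Text: {text}")
    parts.append("Keywords:")
    return "\n".join(parts)


instruction = "Extract keywords from the text below."
# Hypothetical example pairs, written by hand for illustration.
examples = [
    ("Our new headphones cancel noise on long flights.", "headphones, noise cancelling, flights"),
    ("The bakery now delivers fresh bread every morning.", "bakery, delivery, bread"),
]
new_text = "(your input here)"

zero_shot_prompt = zero_shot(instruction, new_text)
few_shot_prompt = few_shot(instruction, examples, new_text)
print(few_shot_prompt)
```

Both prompts end with the same open `Keywords:` line, so the outputs from the two attempts can be compared directly.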

Rule #5

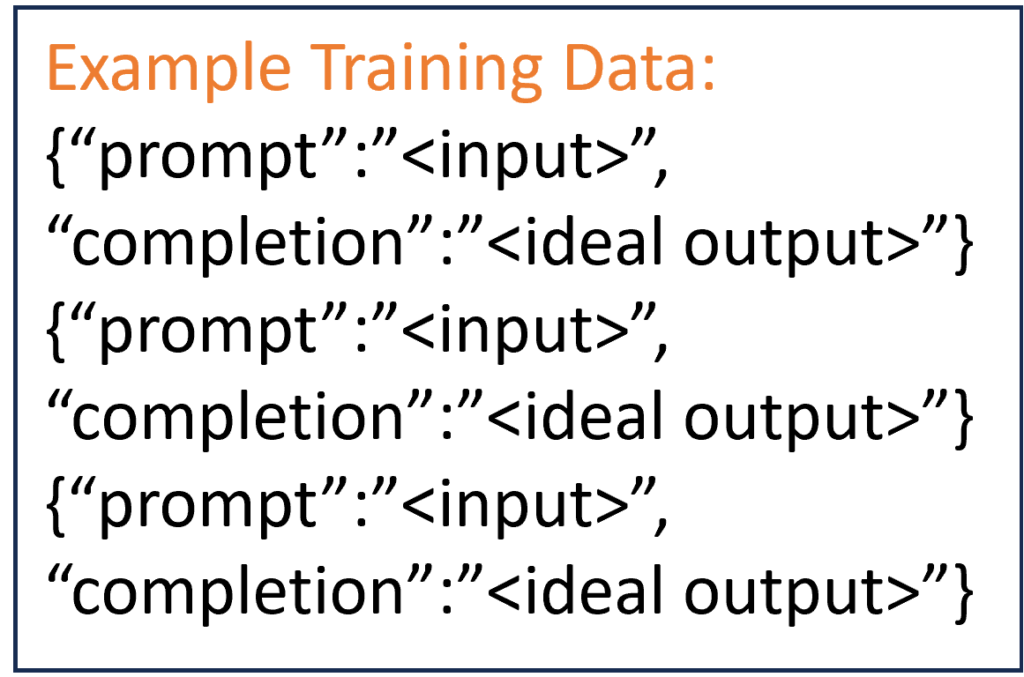

Fine-tune if neither approach in Rule #4 works.

GPT-4 can often generate plausible completions from just a few examples in the prompt, which is known as few-shot learning.

Fine-tuning goes further by training the model on many more examples than fit in a prompt. It can achieve better results on a range of tasks without requiring examples in the prompt, which saves tokens (and therefore cost) and enables lower-latency requests.
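Fine-tuning starts with a training file built from examples like the ones you would otherwise paste into the prompt. The sketch below assumes a chat-style JSONL layout, with one JSON object per line holding a `messages` list of system, user, and assistant turns. That schema is an assumption here, so check your provider's documentation for the exact format; `to_jsonl` and the example pairs are hypothetical.

```python
import json

# Hypothetical (input, ideal output) pairs; a real training set needs many more.
pairs = [
    ("Our new headphones cancel noise on long flights.", "headphones, noise cancelling, flights"),
    ("The bakery now delivers fresh bread every morning.", "bakery, delivery, bread"),
]


def to_jsonl(pairs: list, system_prompt: str) -> str:
    """Serialize each pair as one JSON line (assumed chat-style schema)."""
    lines = []
    for user_text, ideal_output in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": ideal_output},
            ]
        }
        lines.append(json.dumps(record))
    return "\n".join(lines)


training_data = to_jsonl(pairs, "Extract keywords from the user's text.")
print(training_data)
```

Once a model has been trained on data like this, the request only needs the instruction and the new input, which is where the token and latency savings come from.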

Rule #6

Be specific. Omit needless words.

Rule #7

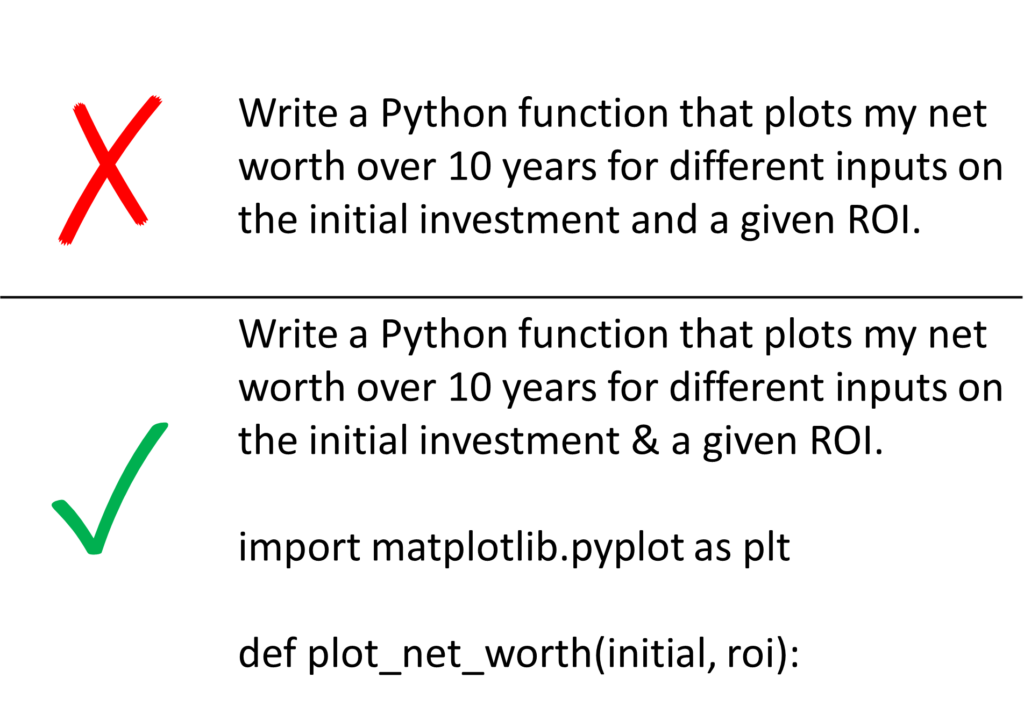

Use leading words to nudge the model towards a pattern.
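A minimal sketch of this idea: end the prompt with the first word or two of the output you want, so the model continues in that pattern. The helper name `with_leading_words` and both tasks are hypothetical.

```python
def with_leading_words(task: str, leading: str) -> str:
    """Append the opening words of the desired output to the prompt."""
    return f"{task}\n\n{leading}"


# Ending with "import" nudges the model to begin a Python file.
python_prompt = with_leading_words(
    "# Write a simple Python function that converts miles to kilometers.",
    "import",
)

# Ending with "SELECT" nudges the model toward a SQL query.
sql_prompt = with_leading_words(
    "# Write a SQL query that lists all customers in Texas.",
    "SELECT",
)
print(python_prompt)
```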

Frequently Asked Questions

Prompt engineering is the practice of designing and optimizing prompts to guide language models for various tasks, such as product descriptions or conversational AI.

1) Start instructions at the beginning, and use separators like ### or """ for clarity.

2) Be specific and detailed about the desired context, outcome, format, and style.

3) Provide examples of the desired output format.

4) Experiment with few-shot learning by trying prompts with and without examples.

5) Fine-tune the model for improved performance and cost efficiency.

6) Be concise and avoid unnecessary words.

7) Use leading words to direct the model’s response pattern.

Few-shot learning is the ability of a model like GPT-4 to generate high-quality completions from only a few examples in the prompt.

Fine-tuning is the next step when prompts with and without examples don’t yield the desired results. It can improve quality, reduce token usage, and lower request latency.

Specificity ensures the model understands the context and delivers accurate and relevant responses. It also helps reduce ambiguity and unnecessary tokens.

Common separators include ### and """. These help clearly delineate instructions from the context.